I recently finished reading Cynthia Brewer’s Designing better maps: A guide for GIS users. Within the book she had an example of making a bi-variate map legend manually in ArcMap, and then the light-bulb went off in my mind that I could use that same technique to make value by alpha maps in ArcMap.

For a brief intro to value by alpha maps, Andy Woodruff (one of the creators) has a comprehensive blog post on what they are and the motivation behind them. Briefly though, we want to visualize some variable in a choropleth map, but that variable is measured with varying levels of reliability. Value by alpha maps de-emphasize areas of low reliability by increasing the transparency of those polygons. I give a few other examples of interest related to mapping reliability in this answer on the GIS site as well, How is margin of error reported on a map?. Essentially the techniques mentioned there either display only certain high reliability locations, make two maps, or use techniques to overlay multiple attributes (like hatching). But IMO value by alpha maps look much nicer than maps with multiple elements, and so I was interested in how to implement them in ArcMap.

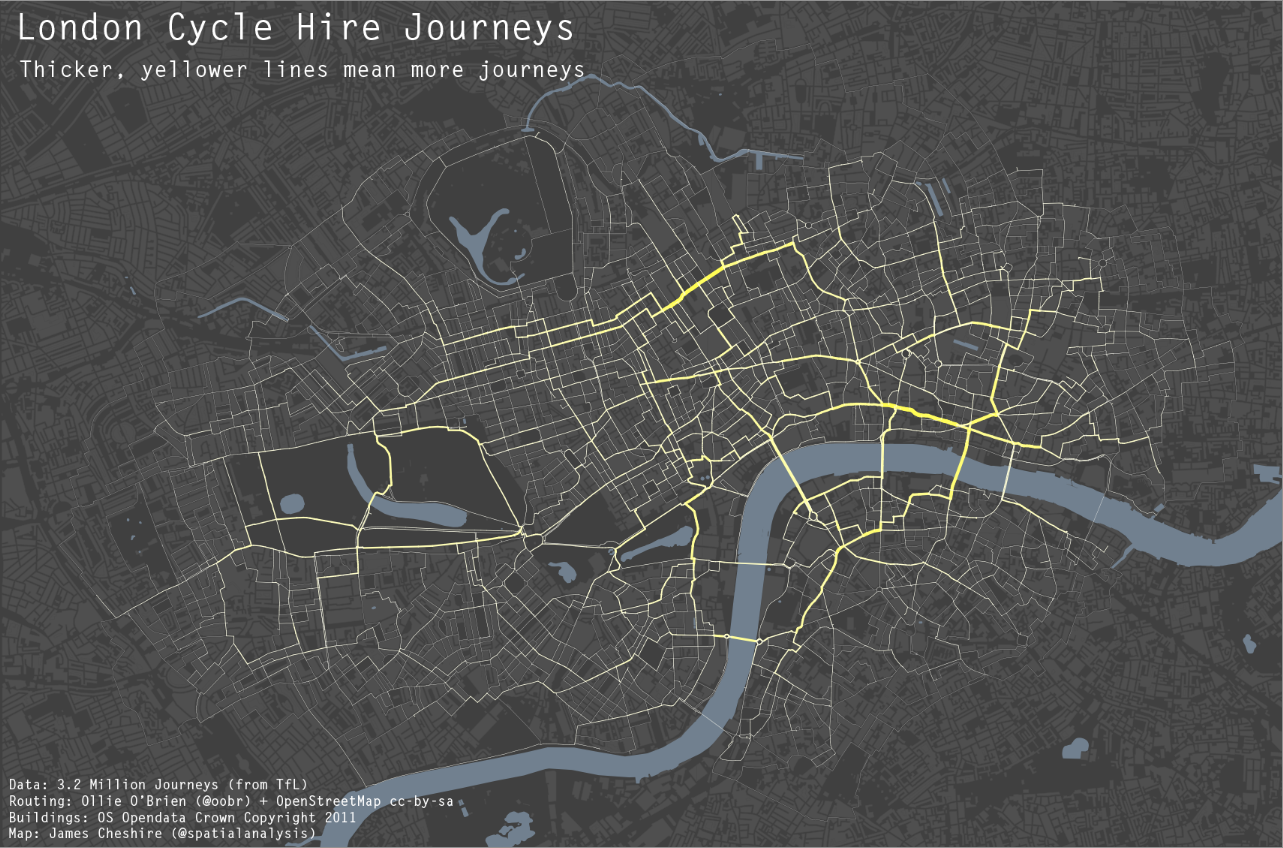

What value by alpha maps effectively do is reduce the saturation and contrast of polygons that are highly transparent (low alpha), making them fade into the background and be less noticeable. I presented an applied example of value by alpha maps in my question asking for examples of beautiful maps on the GIS site. You can click through to see further citations for the map and the reasons why I think the map is beautiful. I include the image below as well (taken from the same Andy Woodruff blog post mentioned earlier).

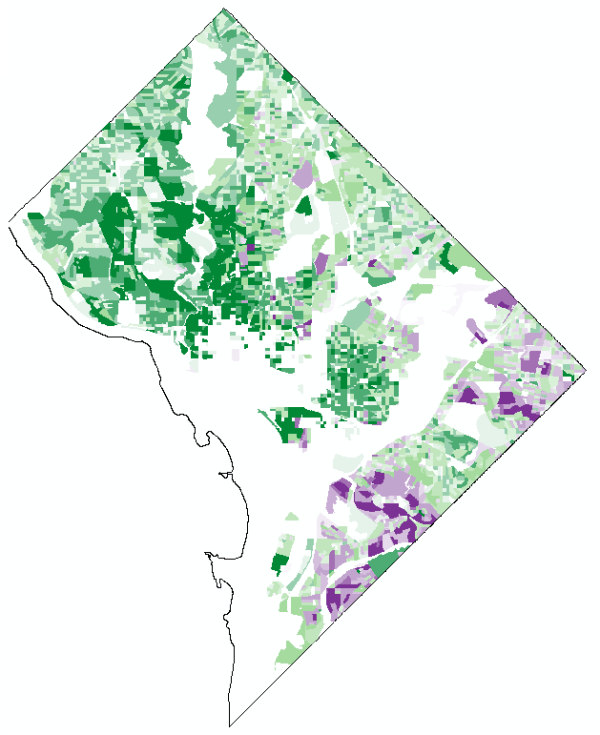
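The fading effect can be sketched with the standard "over" blend of a fill color onto an opaque background. This is only an illustration: the fill color, the white background, and the crude contrast measure are assumptions, not values taken from any of the maps here.

```python
def blend(fg, bg, alpha):
    """Blend fill color fg over an opaque background bg: alpha*fg + (1-alpha)*bg."""
    return tuple(int(round(alpha * f + (1 - alpha) * b)) for f, b in zip(fg, bg))


def contrast(color, bg):
    """Crude contrast measure: largest per-channel difference from the background."""
    return max(abs(c - b) for c, b in zip(color, bg))


DARK_RED = (165, 15, 21)   # hypothetical choropleth fill
WHITE = (255, 255, 255)    # assumed page background

for alpha in (1.0, 0.7, 0.4, 0.1):
    shown = blend(DARK_RED, WHITE, alpha)
    # The lower the alpha, the closer the displayed color is to the background.
    print("alpha={0}: color={1}, contrast={2}".format(alpha, shown, contrast(shown, WHITE)))
```

Note that the transparency setting for a layer is the complement of alpha, so 70% transparency corresponds to an alpha of 0.3.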

Below I present an example displaying the percentage of female heads of households with children (abbreviated PFHH from here on) for 2010 census blocks within Washington, D.C. Here we can consider the reliability of the PFHH to depend on the number of households within the block itself (i.e. we would expect blocks with a smaller number of households to have a higher amount of variability in the PFHH). The map below depicts blocks that have at least one household, and so the subsequent PFHH maps will only display those colored polygons (about a third, 2132 out of 6507, have no households).
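One rough way to see why blocks with few households are less reliable is the standard error of a proportion. The sketch below assumes a simple binomial model (each household an independent draw), which is a simplification and not part of the analysis in this post. The proportion of 0.2 is purely illustrative.

```python
import math


def proportion_se(p, n):
    """Standard error of a proportion p estimated from n households (binomial model)."""
    return math.sqrt(p * (1 - p) / n)


# Illustrative only: the same underlying proportion at different block sizes.
for n in (2, 10, 50, 250):
    print("households={0}: standard error={1:.3f}".format(n, proportion_se(0.2, n)))
```

The standard error shrinks with the square root of the number of households, so the estimate for a block with a handful of households is far noisier than for one with a few hundred.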

I chose the example because female headed households are a typical covariate of interest to criminologists for ecological studies. I also chose blocks as they are the smallest unit available from the census, and hence I expected them to show the widest variability in estimates. Below I provide examples of how one might typically display PFHH while simultaneously incorporating information on the baseline number of households the percentages are computed from.

The first example separately displays the number of households (the denominator) on the left and the percent of female headed households with children on the right, both in a sequential choropleth scheme (darker colors are a higher PFHH and number of households).

One can also superimpose the same information on the map. Sun & Wong, 2010 suggest one use cross hatching above the choropleth colors to depict reliability, but here I will demonstrate using choropleth colors for the baseline number of households and proportional point symbols for the PFHH. I supplement the map on the right with a scatterplot that has the number of households on the X axis and the PFHH on the Y axis.

These both do an alright job (if you made me pick one, I think I would pick the side-by-side set of maps), but let’s see if we can do better with value-by-alpha maps! The following tutorial is broken up into two sections. The first section talks about actually generating the map, and the second section talks about how to make the legend. Neither is difficult, but making the legend is more of a pain in the butt.

How to make the value by alpha map

First one can start out by making the base layer with the desired choropleth classifications and color scheme. Note here I changed what I am visualizing from a sequential color scheme of PFHH to location quotients with only four categories. I will discuss why I did this later on in the post.
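The formula for the location quotients is not spelled out here, so the following is only a sketch under a common definition: the block's PFHH divided by the PFHH for the whole city. The class breaks and the counts are made up for illustration and are not the ones used in the map.

```python
from bisect import bisect_right


def location_quotients(fhh, households):
    """Block PFHH divided by the citywide PFHH; None for blocks with no households."""
    overall = float(sum(fhh)) / sum(households)
    return [(float(f) / h) / overall if h > 0 else None
            for f, h in zip(fhh, households)]


# Hypothetical breaks giving four categories; not the breaks used in the map.
BREAKS = [0.5, 1.0, 2.0]


def lq_class(lq):
    """Index (0 to 3) of the category a location quotient falls in."""
    return bisect_right(BREAKS, lq)


fhh = [0, 2, 5, 12]            # made-up counts of female headed households
households = [0, 20, 25, 30]   # made-up household counts
for lq in location_quotients(fhh, households):
    print(lq, None if lq is None else lq_class(lq))
```

A quotient above 1 means the block has a higher PFHH than the city as a whole, and a quotient below 1 means a lower one.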

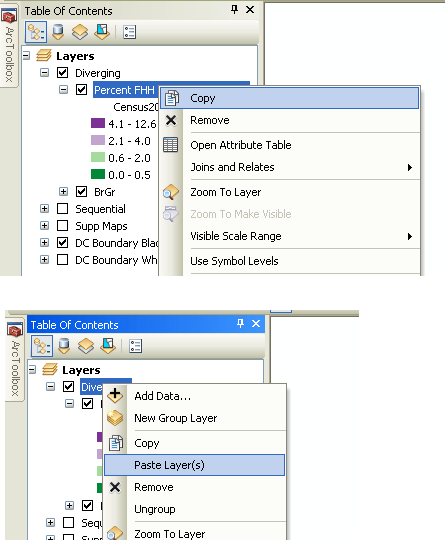

Then one can make several copies of that layer (right click -> copy -> paste within hierarchy), based on however many different reliability classifications you want to display. Here I will do 4 different reliability classifications. Note that after you make them, it is easier to manage the TOC if you group them.

Then, for each layer, one uses selection criteria to keep only those polygons that fall within the specified reliability range, and then sets the transparency for that layer to the desired value.
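If you would rather not type the selection criteria by hand, the where clause and transparency for each layer can be generated. The sketch below is only an illustration: the field name `HH`, the class breaks, and the linear spacing of the alpha values are all assumptions, and the double-quoted field delimiter depends on the data source.

```python
def reliability_layers(breaks, field="HH", min_alpha=0.2):
    """Build one (where clause, transparency %) pair per reliability class.

    breaks: ascending lower bounds of each class; the last class is open-ended.
    field: hypothetical name of the household-count attribute.
    """
    n = len(breaks)
    layers = []
    for i, lower in enumerate(breaks):
        clause = '"{0}" >= {1}'.format(field, lower)
        if i + 1 < n:
            clause += ' AND "{0}" < {1}'.format(field, breaks[i + 1])
        # Alpha rises linearly from min_alpha (least reliable) to 1 (most reliable).
        step = (i / float(n - 1)) if n > 1 else 1.0
        alpha = min_alpha + (1 - min_alpha) * step
        # Layer transparency is the complement of alpha, as a percentage.
        layers.append((clause, int(round(100 * (1 - alpha)))))
    return layers


# Hypothetical breaks for four reliability classes.
for clause, transparency in reliability_layers([1, 10, 50, 100]):
    print("{0}% transparent: {1}".format(transparency, clause))
```

Each clause can be pasted into the corresponding layer by hand. It should also be possible to script this through the `definitionQuery` and `transparency` properties of `arcpy.mapping` layer objects, though that step is not shown here.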

And voila, you have your value by alpha map. Note if after you make the layers you decide you want a different classification and/or color scheme, you can make the changes to one layer and then apply the changes to all of the other layers.

How to make the legend

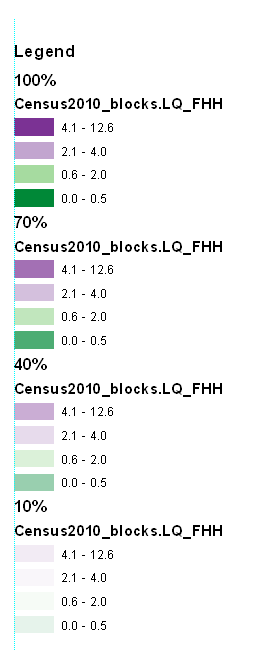

Now making the legend is the harder part. If one goes to the layout view, one will see that since in this example one has essentially four layers superimposed on the same map, one has four separate legend entries. Below is what it looks like with my defaults (plus a vertical rule I have in my map).

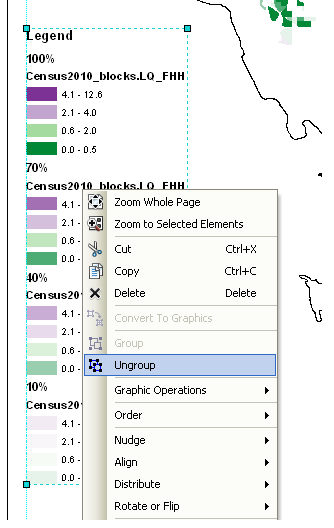

What we want in the end is a bivariate scheme, with the PFHH dimension running up and down, and the transparency dimension running from one side to the other (the same as in the example mortality rate map at the beginning of the post). To do this, one has to convert the legends to graphics.

Then ungroup the elements so each can be individually manipulated. Note, sometimes I have to do this operation multiple times.

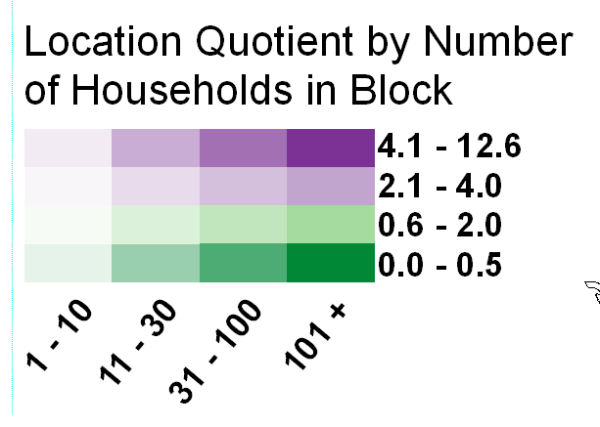

Then re-arrange the panels and labels into the desired format.

More tedious than making the separate layers, but not crazy unreasonable if you only have to do it for one map (or a small number of maps). If you need to do it for a larger number of maps a better workflow will be needed, like creating a separate “fake inset” map that replicates the legend, making the legend in a separate tool, or just making the map entirely in a program where alpha blending is more readily incorporated. For instance in statistical packages it is typically a readily available encoding that can be added to a graphic (they will also allow continuous color ramps and continuous levels of transparency).
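As one take on the "separate tool" option, the grid of legend swatches can be written out as a small SVG file and then imported into the layout. This is a minimal sketch: the hex colors, alpha levels, and cell size are hypothetical, and labels are left out.

```python
def legend_svg(colors, alphas, cell=30, background="white"):
    """Return SVG markup: one row per color class, one column per alpha level."""
    width, height = cell * len(alphas), cell * len(colors)
    parts = [
        '<svg xmlns="http://www.w3.org/2000/svg" width="{0}" height="{1}">'.format(width, height),
        '<rect width="100%" height="100%" fill="{0}"/>'.format(background),
    ]
    for row, color in enumerate(colors):
        for col, alpha in enumerate(alphas):
            # Each swatch is the class color drawn at that column's opacity.
            parts.append(
                '<rect x="{0}" y="{1}" width="{2}" height="{2}" fill="{3}" fill-opacity="{4}"/>'.format(
                    col * cell, row * cell, cell, color, alpha))
    parts.append("</svg>")
    return "\n".join(parts)


COLORS = ["#a50f15", "#fb6a4a", "#6baed6", "#08519c"]  # hypothetical classes, top row first
ALPHAS = [0.2, 0.47, 0.73, 1.0]                        # hypothetical, least to most reliable

svg = legend_svg(COLORS, ALPHAS)
print(svg.count("<rect"))  # one background rectangle plus one per swatch
# To save it: open("vba_legend.svg", "w").write(svg)
```

Passing `background="black"` gives the dark-background variant of the same legend, so the swatches fade towards whatever the map background is.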

And voila, here is the final map. To follow is some discussion about choosing color schemes and whether you should use a black background or not.