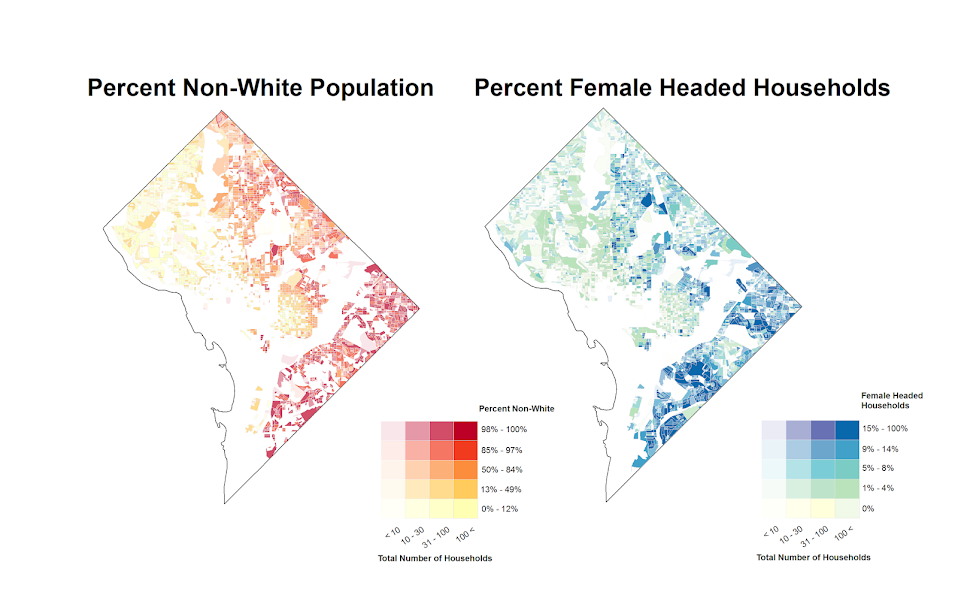

Word cloud of the frequency of words in Dr. Seuss’s Cat in the Hat via Jason Davies D3.js app.

I try to view the practice of data visualization with an open mind. In particular, I like to think that there is rarely a strict dichotomy of good or bad visualizations (or synonymously maps). It is a continual gradation, but we can use knowledge of visual perception to guide us as to visualizations that will be better for communicating particular information. Here I want to spend a few minutes talking about word clouds, and point out some things that they do well along with the bad.

So first, I will be focusing solely on the presentation of data in the form of the word cloud. Jacob Harris has a wonderful rant about why he hates word clouds, which focuses on the fact that many word clouds are devoid of real meaning. You can present meaningless content in any graphical form you want, so I will not discuss this further.

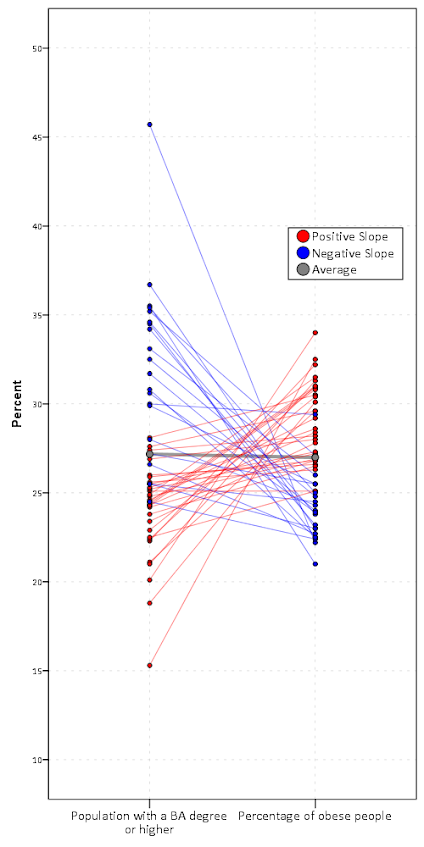

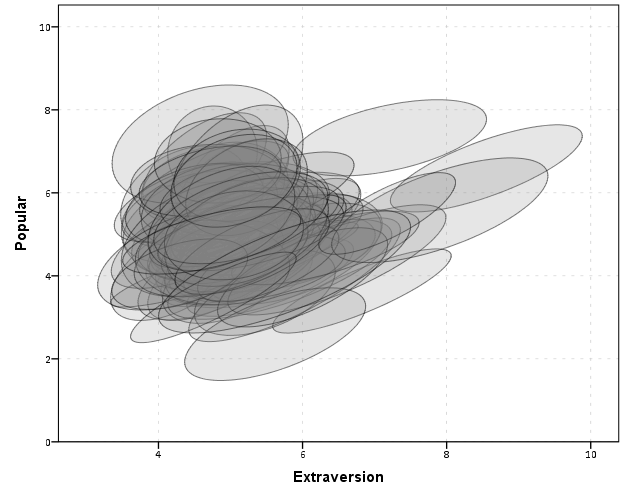

When we evaluate the utility of any particular graphic, we need to be clear about the motivation for the graphic – what data the graphic is intended to display to its audience. With that goal in mind, we can then evaluate how well the graphic meets it. If the goal of a word cloud is a graphical depiction of the entire distribution of words, it is obviously inferior to a bar chart (or, with a long tail, a chart of the empirical CDF). The words in a word cloud tend to be all jumbled up and in no particular order, so it is difficult to see the distribution. If the goal is a quantitative estimate of the difference between two words, word clouds also fail, because we know that area judgements are much more difficult than comparing lengths along an aligned axis. In addition, there is another confound: the length of the word (or even particular letters with ascenders or descenders, depending on the font used) further distorts the size differences between the words in the word cloud. That is, even if the font sizes are the same, SomeReallyBigLongWord will appear larger in the graphic than a word such as tiny. Again, bar charts are clearly superior for either of these tasks, and Marti Hearst has a nice PDF white paper discussing these shortcomings (via Stephen Few’s Perceptual Edge site), What’s up with Tag Clouds. Also see Stephen Few’s critique of the use of packed circles in Tableau for a similar argument.
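To make the word-length confound concrete, here is a minimal sketch in Python. It assumes a crude model in which every character is 0.6 em wide and a word occupies a simple bounding box; the function name `approx_word_area` and the 0.6 figure are illustrative assumptions, not measurements from any real font or word cloud library.

```python
def approx_word_area(word: str, font_size: float, char_width_em: float = 0.6) -> float:
    """Rough bounding-box area of a word set at a given font size.

    Assumes every character is `char_width_em` ems wide (an illustrative
    simplification; real fonts vary per glyph).
    """
    width = len(word) * char_width_em * font_size
    height = font_size
    return width * height


# Same font size, very different ink on the page.
long_area = approx_word_area("SomeReallyBigLongWord", font_size=20)
short_area = approx_word_area("tiny", font_size=20)
ratio = long_area / short_area
print(f"The long word covers about {ratio:.2f} times the area of the short one.")
```

Under this model the area ratio is just the ratio of the character counts, so two words with identical frequencies (and identical font sizes) can differ severalfold in visual footprint.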

Given the shortcomings, there are two potential aspects of word clouds that I personally find appealing in terms of communicating information. They both have to do with focusing the attention on particular words, as opposed to focusing on the empirical distribution of words or on the size difference between two words. The first aspect I would relate to the Stroop effect. Stroop’s experiment showed that reading words that provide an interfering mental stimulus slows reaction time. Or more simply, it is easier to read red and blue when each is printed in its own color than it is to read green and orange printed in some other color. So what does this have to do with tag clouds? In tag clouds, the differentially sized words themselves are the data. There is no cognitive burden as in bar charts because one need not navigate between the label and the bar.

The second aspect is related to the fact that in a particular graphic, there are certain elements that will dominate the reader’s attention. This is similar to creating a layering in a chart (which is often discussed in cartography), e.g. making elements you want to focus attention on come to the foreground, and letting other elements recede to the background. For one example, Stephen Kosslyn argues that bar charts are preferable to Cleveland dot plots for presentations, as the bars have a higher visual recognition (i.e. they stand out more or are more salient). Word clouds do this very well for the bigger words, while bar charts tend to be more appropriate for visualizing the entire distribution. That is, in a bar chart the labels of the bigger words aren’t immediately more salient – the actual bars displaying the data are.

Based on these, I suspect that in a user study word clouds would outperform bar charts on the following tasks in terms of time taken to perform the task:

- Return the top three words in the graph.

- Given two particular words, determine which word is larger than the other.

Or more simply, even though we are not terribly effective at estimating areas, we can still rank order based on the size of the words. So in terms of rank order tasks I think word clouds will perform alright for simple comparisons. Given no time constraints, I would expect bar charts to always be more accurate, but depending on the nature of the task they will take longer. For the top three words, if the chart is ordered one would need to read the top three and then reply. If the chart is unordered, one needs to take time to find them (this is where the interference and the Stroop effect come into play). In most word clouds I see, these top words are pretty much immediately recognizable, and so I suspect word clouds will be much faster for these tasks.

For the two particular words, I suspect it will boil down to the format in which you can find the words the fastest. In the bar chart, you have to scan a list, whereas in a tag cloud the words are unordered. With the exception of very tiny words in the word cloud, I bet you will find each word faster in a word cloud in most examples. (This is my guess; I know of no experiments confirming this specific scenario.)

So what does this mean in terms of visualizing data – either in the form of a tag/word cloud or a more typical form like a bar chart? For the bar chart, the bar is the most salient feature of the graph. If you want to draw more attention to the particular words, while still leaving the data intact, consider direct labels of the bars or maybe a more table-like graphic. As always, if you can selectively filter out a larger number of words, it will provide a simpler graphic to evaluate for the remaining items.

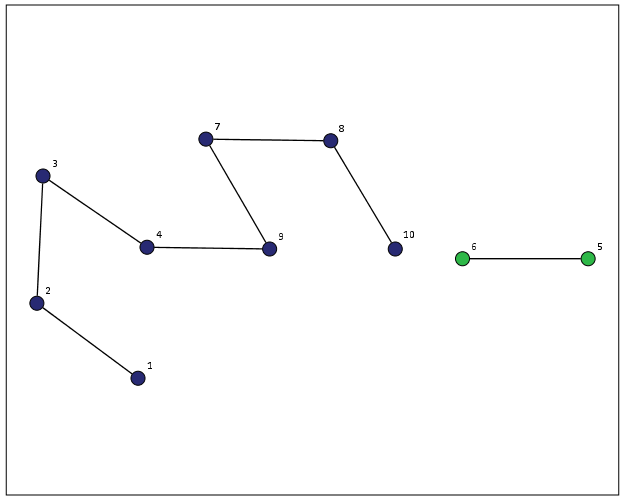

Can the tag cloud ever be a reasonable data visualization compared to a simple table or bar graph? I believe it can, but only if the typical layout algorithms are improved to incorporate ancillary information. Tag cloud layouts are very similar to bin packing algorithms, and strike me as natively similar to force-directed network layout algorithms (such as Dorling cartograms). The layouts used by Wordle or the Jason Davies implementation simply place the words by a convenient algorithm in rank order of importance. They pretty much just take the biggest word, place it, then go around in a circle to find a place for the next biggest word.
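The "biggest word first, then circle around" idea can be sketched in a few lines of Python. This is only an illustration of the general greedy approach, not the actual code of Wordle or the Jason Davies implementation. It assumes each word is an axis-aligned rectangle, that font size scales with the square root of the count, and that candidate positions follow an Archimedean spiral from the center; the function names and the word counts are made up for the example.

```python
import math


def boxes_overlap(a, b):
    """True if two rectangles (cx, cy, width, height), given by centre, intersect."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return abs(ax - bx) * 2 < (aw + bw) and abs(ay - by) * 2 < (ah + bh)


def spiral_layout(counts, base_size=10.0, char_width_em=0.6, step=0.1, max_steps=100_000):
    """Greedily place words, largest first, at the first free spot on a spiral.

    Returns {word: (cx, cy, width, height)}. A word that finds no free spot
    within `max_steps` is silently left out.
    """
    placed = {}
    for word, count in sorted(counts.items(), key=lambda kv: -kv[1]):
        size = base_size * math.sqrt(count)  # assumed size scaling
        width, height = len(word) * char_width_em * size, size
        for i in range(max_steps):
            t = i * step  # angle, and also radius, along the spiral
            box = (t * math.cos(t), t * math.sin(t), width, height)
            if not any(boxes_overlap(box, other) for other in placed.values()):
                placed[word] = box
                break
    return placed


# Hypothetical counts, for illustration only.
layout = spiral_layout({"cat": 9, "hat": 4, "fish": 1, "thing": 1})
for word, (cx, cy, w, h) in layout.items():
    print(f"{word:>6}: centre=({cx:7.1f}, {cy:7.1f}) size=({w:5.1f} x {h:4.1f})")
```

Notice that nothing in this procedure uses information beyond the rank order of the words: where a word ends up relative to any other word carries no meaning, which is exactly the gap that ancillary information could fill.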

We can think of other ways to lay out the words though. CiteULike has a wonderful example where the words are laid out in alphabetical order; you can see my entire library of tags here. Here is a screenshot of my tag library on the homepage, where there is less space, so they filter out the small tags:

The motivation for this is not to view the empirical distribution of the tags, but to look up particular tags. You can click on a tag and it brings up all of the entries in my library with that tag. If you don’t want to navigate to an additional site, simply take a look at the sidebar to the right of this WordPress template I use. There is a library of the tags I use on this site, ordered alphabetically and filtered, but with the font sized in proportion to the number of posts on this site that use each tag. I find these uses of word clouds quite convenient, where I would rather give priority to the words themselves over specific quantitative estimates of the frequencies.
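An alphabetical, filtered, size-scaled tag list of this kind is simple to compute. The sketch below is my own illustration and not how CiteULike or WordPress actually build theirs. It assumes a minimum-count filter and a linear mapping from count to a pixel size between `min_px` and `max_px`; the function name, the parameters, and the tag counts are all hypothetical.

```python
def alphabetical_tag_cloud(tag_counts, min_count=2, min_px=10.0, max_px=32.0):
    """Return [(tag, font_px)] sorted alphabetically, dropping rare tags.

    Font size is interpolated linearly between `min_px` and `max_px`
    over the range of counts that survive the filter.
    """
    kept = {tag: n for tag, n in tag_counts.items() if n >= min_count}
    if not kept:
        return []
    lo, hi = min(kept.values()), max(kept.values())
    span = (hi - lo) or 1  # avoid dividing by zero when all counts are equal
    return [
        (tag, min_px + (max_px - min_px) * (kept[tag] - lo) / span)
        for tag in sorted(kept, key=str.lower)
    ]


# Hypothetical tag counts, for illustration only.
tags = {"visualization": 12, "R": 5, "maps": 2, "misc": 1}
cloud = alphabetical_tag_cloud(tags)
for tag, px in cloud:
    print(f"{tag}: {px:.1f}px")
```

Because the order is alphabetical, finding a known tag is a simple scan, while the font size still lets the heavily used tags stand out.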

There are other potential ways to lay out the words though that can hold other quantitative information. For instance, I could lay out the tags in my CiteULike library according to shared articles. I find coloring the words and the typical random-order layouts distracting, but for particular tasks (such as simple look up) I find they work just fine. Again, because the focus is on the word, and not on exact quantitative assessment of the magnitude (nor understanding of the overall distribution), I say tag clouds are better in this particular circumstance than a bar chart is. My arguments based on cognitive load and speed of surveying word clouds certainly do not make them appropriate for all data viz. tasks, but I believe they do for some.