Being out of academia for a bit now gives me some perspective on common behaviors I now know are not normal for other workplaces. Andrew Gelman and Jessica Hullman’s posts are what recently brought this topic to mind. Both what Jessica describes and other behavior Andrew Gelman commonly points out on his blog closely match my personal experience at multiple institutions. So even though we all span different areas in science, it appears academic culture is quite similar across places and topical areas.

One such behavior in academia is senior academics shirking responsibility – deadwoods. This I can readily attribute to rational behavior, so although I found it infuriating it was easily explainable. Hey, if you let me collect a paycheck into my 90s I would likely be a deadwood at that point too! (Check out this Richard Larson post on why universities should encourage more professors to be semi-retired.)

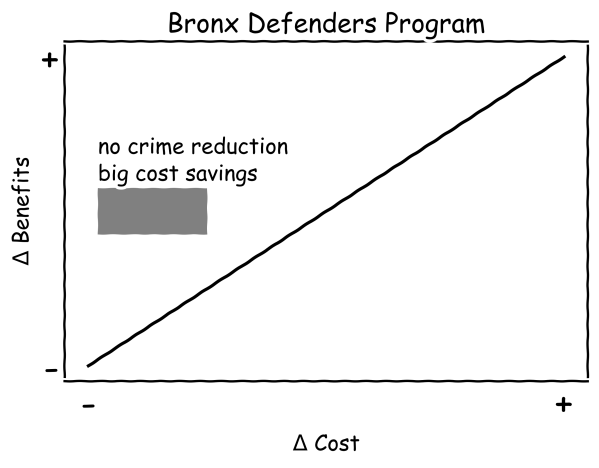

Another behavior I had a harder time wrapping my head around was what I will refer to as the culture of critique. To the extent that we have a scientific method, a central component of that is to be critical of scientific results. If I read a news article that says X made crime go up/down, my immediate thought is ‘there needs to be more evidence to support that assertion’.

That level of skepticism is a necessary component of being an academic. We apply this skepticism not only to newspaper articles, but to each other as well. University professors don’t really have a supervisor like in normal jobs. Instead we each evaluate our peers’ research through various mechanisms (peer reviewing journal articles, tenure review, reviewing grant proposals, critiquing public presentations, etc.).

This again is necessary for scientific advancement. We all make mistakes, and others should be able to rightly go and point out my mistakes and improve upon my work.

This bleeds out though in several ways that negatively impact academics’ ability to interact with one another. I don’t really have a well scoped out outline of these behaviors, but here are several examples I’ve noticed over time (in no particular order):

1) The person receiving critiques cannot distinguish between personal attacks and legitimate scientific ones. This has two parts, one is that even if you can distinguish between the two in your mind, they make you feel like shit either way. So it doesn’t really matter if someone gives a legitimate critique or someone makes ad hominem attacks – they each are draining on your self-esteem the same way.

The second part is people actually cannot tell the difference in some circumstances. In replication work on fish behavior pointing out potential data fabrication, some scientists’ response is that it is intentionally cruel to critique prior work. Original researchers often call people who do replications data thugs or shameless bullies, impugning the motives of those who do the critiques. For a criminology example check out Justin Pickett’s saga trying to get his own paper retracted.

To be fair to the receiver of critiques, it is not uncommon for a critique to mix legitimate points with personal attacks, so it is reasonable to not know the difference sometimes. I detail on this blog a series of back-and-forth exchanges on officer involved shooting research, in which several individuals from both sides again have their motivations impugned based on their research findings. So 2) the person sending critiques cannot distinguish between legitimate scientific critique and unsubstantiated personal attacks.

One of the things that is pretty clear to me – we can pretty much never have solid proof of the motives or minds of people. We can only point out either logical flaws in work, or in the more severe case of forensic numerical work can point out inconsistencies that are at best gross negligence (and at worst intentional malfeasance). It is also OK to point out potential conflicts of interest of course, but relying on that as a major point of scientific critique is often pretty weak sauce. So while I cannot define a bright line between legitimate and illegitimate critique, I don’t think in practice the line is all that fuzzy.

But because critiquing is a major component of many things we do, we have 3) piling on every little critique we can think of. I’ve written about how many reviewers have excessive complaints about minutiae in peer reviews, in particular people commonly critique clearly arbitrary aspects of writing style. I think this is partly because even when people really don’t have substantive things to say, they go down the daisy chain and create critiques out of something. Nothing is perfect, so everything can be critiqued in some way, but clearly what citations you included are rarely a fundamental aspect of your work. But that part of your work is often the major component of how you are evaluated, at least in terms of peer reviewed journal articles.

This I will admit is a harder problem though – personal vs legitimate critiques I don’t think is that hard to tell the difference – but what counts as a deal breaker vs acceptable problem with some work is a harder distinction to make. This results in someone being able to always justify rejecting some work on some grounds, because we do not have clear criteria for what is ‘good enough’ to publish, ‘justified enough’ to get a grant, ‘excellent enough’ to get an award, etc.

4) The scarlet mark. Academics have a difficult time separating out critiques of one piece of research vs a person’s work as a whole. This is admittedly the one where I have the weakest evidence of widespread examples across fields (only personal anecdotes really, though the original Gelman/Hullman posts point out some similar churlish behavior, such as asking others to disassociate themselves), but it was common in my circle of senior policing scholars to critique other younger policing scholars out of hand. It happened to me as well, with senior academics saying directly to me that based on the work I do I shouldn’t count as a policing scholar.

Another common example I came across was opinions of the Piqueros and their work. It would be one thing to critique individual papers, but oftentimes people dismissed their work offhand because they are prolific publishers.

This is likely also related to network effects. If you are in the right network, individuals will support you and defend your work (perhaps without regard to the content). Whereas if you are in an outside network folks will critique you. Because it is fair game to critique everything, and there are regular norms in peer review to critique things that are utterly arbitrary, you can sink a paper for what appear to be objective reasons when really you are just piling on superficial critiques. So of course if you have already decided you do not like someone’s work, you can pile on whatever critiques you want with impunity.

The final behavior I will point out, 5) never back down or admit faults. For a criminal justice example, I will point out an original article in JQC and critique in JQC about interaction effects. So the critique by Alex Reinhart was utterly banal, it was that if you estimate a regression model:

y = B1*[ log(x1*x2*x3) ]

This does not test an interaction effect, quite the opposite, it forces the effects to be equal across the three variables:

y = B1*log(x1) + B1*log(x2) + B1*log(x3)

Considering a major hypothesis for the paper was testing interaction effects, it was kind of a big deal for interpretations in the paper. So the response by the original authors should have been ‘Thank you Alex for pointing out our error, here are the models when correcting for this mistake’, but instead we get several pages of non sequiturs that attempt to justify the original approach (the authors confuse formative and reflective measurement models, and the distribution of your independent variables doesn’t matter in regression).
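The algebra behind the critique, that log(x1*x2*x3) equals log(x1) + log(x2) + log(x3), can be checked with a small simulation. This is only a sketch on made-up data, not the models from either JQC article: the predictors, the coefficients in `true_b`, the intercept, and the helper `ols` are all assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Hypothetical positive predictors x1, x2, x3 (simulated, not real data)
x = rng.uniform(1.0, 10.0, size=(n, 3))
log_x = np.log(x)

# Simulated outcome where each logged predictor has a DIFFERENT effect
true_b = np.array([2.0, 0.5, -1.0])
y = log_x @ true_b + rng.normal(0.0, 0.1, size=n)

def ols(X, y):
    """Least squares with an intercept; returns [intercept, slopes...]."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef

# Model from the critique: a single coefficient on log(x1*x2*x3)
b_product = ols(np.log(x.prod(axis=1)), y)

# Same model written out: a single coefficient on the sum of the logs
b_sum = ols(log_x.sum(axis=1), y)

# Unconstrained model: a separate coefficient for each logged predictor
b_separate = ols(log_x, y)

print("log of product :", b_product)
print("sum of logs    :", b_sum)
print("separate slopes:", b_separate[1:])
```

The first two fits give the same coefficients, since they are the same model. Only the third fit is free to recover three different slopes, which is the sense in which the product-inside-the-log specification forces the effects to be equal rather than testing how they interact.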

To be fair this never-admit-you-are-wrong behavior appears to apply to everyone, not just academics. Andrew Gelman on his blog often points to journalists refusing to correct mistakes as well.

The irony of never back down is that since critique is a central part of academia, you would think it would also be normative to say ‘ok I made a mistake’ and/or ‘OK I will fix my mistake you pointed out’. Self correcting is surely a major goal of critiques and I mean we all make mistakes. But for some reason admitting fault is not normative. Maybe because we are so used to defending our work through a bunch of nonsense (#2) we also defend it even when it is not defensible. Or maybe because we evaluate people as a whole and not individual pieces of work (#4) we need to never back down, because you will carry around a scarlet mark of one bad piece forever. Or because we ourselves cannot distinguish between legitimate/illegitimate (#1), people never back down. I don’t know.

So I am sure a sociologist who does this sort of analysis for a living could make better sense of why these behaviors exist than I can. I am simply pointing out regular, repeated interactions I had that make life in academia very mentally difficult.

But again I think these are maybe intrinsic to the idea that skepticism and critiquing are central to academia itself. So I don’t really have any good thoughts on how to change these manifest negative behaviors.